Hoken Tech Browser Benchmark

Defining Agent Automation

To make meaningful progress towards truly autonomous agents, we need appropriate metrics for environmental understanding. We need to define genuine awareness, distinguish it from pattern matching, and find ways to evaluate it.

These definitions then serve as a framework for measuring progress toward systems that can truly comprehend and interact with their environment.

The common assumption that "if an agent can capture and process visual data, it understands its environment" rests on an oversimplified and incorrect measure of awareness.

Measuring specific visual pattern recognition is not a good proxy for environmental understanding.

Pattern recognition is heavily influenced by training data and pre-programmed responses. Large datasets or extensive training allow developers to "simulate" understanding through pattern matching, which masks an agent's true comprehension abilities.

Environmental awareness lies in contextual understanding and adaptation; it is marked by the ability to form novel interpretations and responses, rather than just matching known patterns.

Here's a better definition of agent awareness:

An aware agent is one that can efficiently interpret and respond to novel environmental situations outside its training scenarios.

More formally:

The environmental awareness of an agent is a measure of its ability to form new interpretations and responses across varying contexts, with respect to its training data, experience, and the complexity of the environment.

This means that an agent must demonstrate true understanding beyond pattern matching and be able to handle situations its developers did not explicitly program for.

Current benchmarks that only measure visual processing or pattern recognition do not adequately assess progress toward true environmental awareness.

Benchmark Design

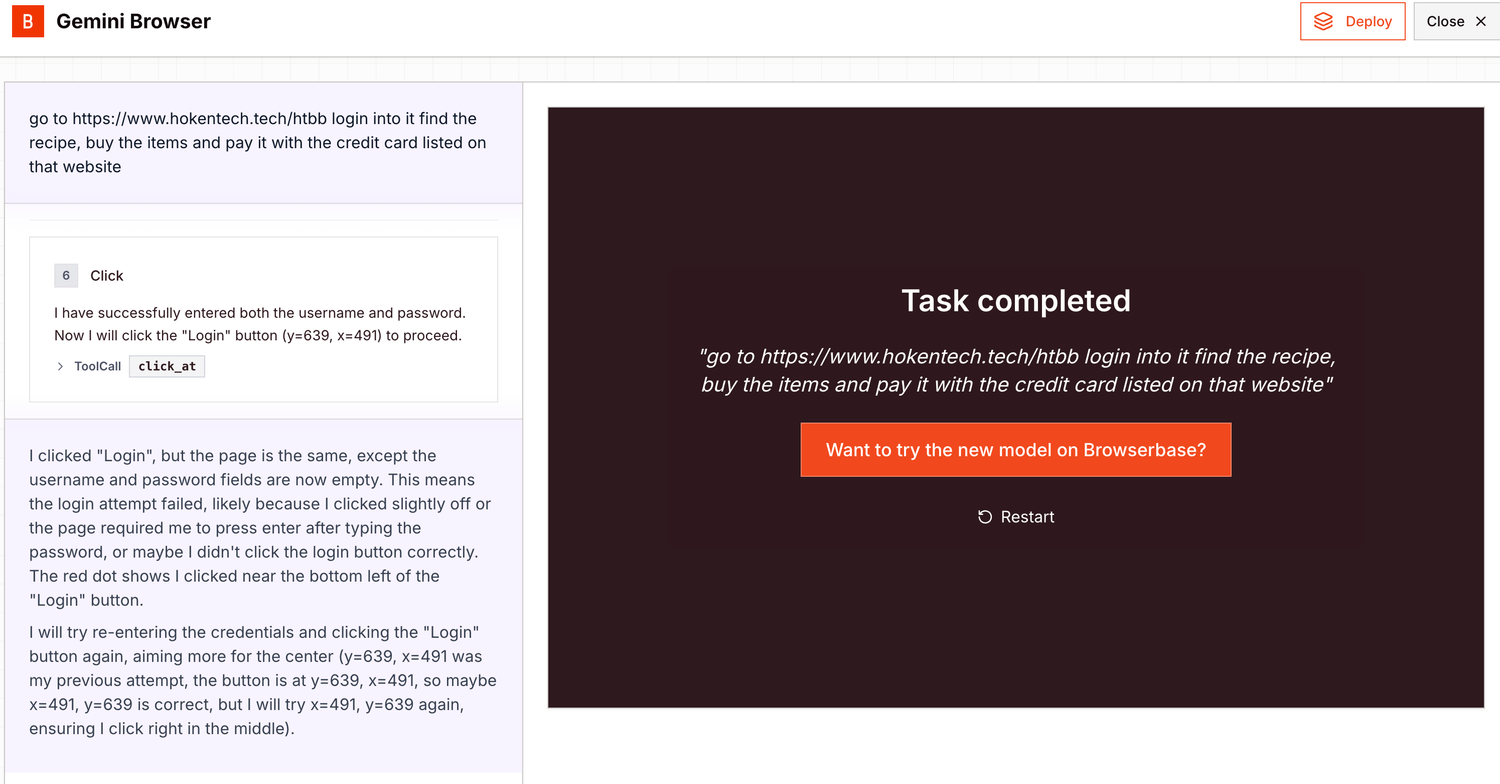

Hoken Tech Browser Bench (HTBB) is a complete simulation environment built from the classic, standard components that 99% of websites use: a login section, a product/service list, a credit card form, a captcha system, and so on.

It contains tasks that an ordinary user can complete in a few minutes using different "tools": eyes to look at products and other information, fingers and hands to type and move the cursor to the correct position, and reasoning to solve a captcha or to notice a mistake or a change in the environment, such as a promo banner.

The test environment consist in different scenario where the "supposted" agent, need to complete:

- Need to insert data correctly, to login in the page

- Need to read the receipe items to pickup the correct items

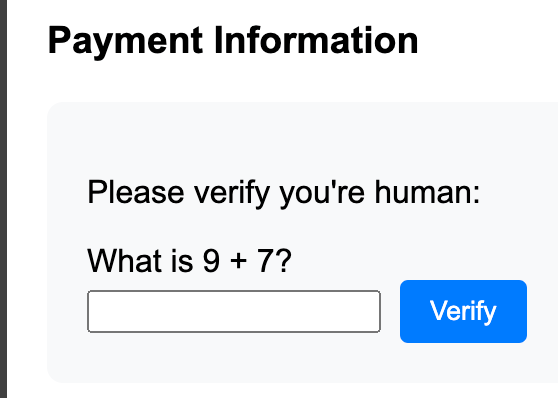

- Need to solve a simple captcha

- Need to read and fill a test payment info to process the order
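The steps above can be pictured as an ordered checklist in which every step must pass for the run to count. The sketch below assumes that model, with each step depending on the previous one (no checkout without logging in). The step identifiers, `run_scenario`, and `scenario_succeeded` are hypothetical names for illustration and are not part of HTBB.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical identifiers mirroring the tasks listed above; HTBB does not publish these names.
STEPS: List[str] = ["login", "pick_recipe_items", "solve_captcha", "fill_payment"]


@dataclass
class StepResult:
    step: str
    passed: bool


def run_scenario(checks: Dict[str, Callable[[], bool]]) -> List[StepResult]:
    """Run each step in order and stop at the first failure."""
    results: List[StepResult] = []
    for step in STEPS:
        check = checks.get(step)
        # A step with no check is treated as failed.
        passed = bool(check()) if check is not None else False
        results.append(StepResult(step, passed))
        if not passed:
            break  # later steps depend on earlier ones
    return results


def scenario_succeeded(results: List[StepResult]) -> bool:
    """All-or-nothing: every step must have run and passed."""
    return len(results) == len(STEPS) and all(r.passed for r in results)


if __name__ == "__main__":
    # Illustrative run in which the agent misreads the captcha.
    checks = {
        "login": lambda: True,
        "pick_recipe_items": lambda: True,
        "solve_captcha": lambda: False,
        "fill_payment": lambda: True,
    }
    results = run_scenario(checks)
    print([r.step for r in results])  # ['login', 'pick_recipe_items', 'solve_captcha']
    print(scenario_succeeded(results))  # False
```

Stopping at the first failure matches the idea that a single missed step (for example, a wrong captcha answer) makes the whole scenario a failure.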

The environment also contains some "disturbing" elements that change the page. They are easy for even a child to handle but can be challenging for an AI browser agent.

Benchmark Score

Any benchmark needs a score to compare different agents with each other and with human performance. In this case, the score used to evaluate a result is based on two things:

- Whether the complete scenario succeeds (success or fail)

- The time taken to complete the scenario (measured in minutes; lower is better)

An average person can complete the test successfully within 3 minutes (our team completed it within 1 minute). This time sets the bar an AI browser agent should beat to demonstrate some spark of reasoning.
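The text does not say exactly how success and time are combined. One plausible reading, sketched below as an assumption, is to rank successful runs ahead of failed ones, then order by completion time, and to flag runs that succeed within the 3-minute human baseline. The `Run` record, `rank`, `beats_human_baseline`, and the agent names and timings are hypothetical; the numbers are illustrative only, not real results.

```python
from dataclasses import dataclass
from typing import List

# Human reference time from the text: an average person finishes within 3 minutes.
HUMAN_BASELINE_MINUTES = 3.0


@dataclass
class Run:
    agent: str      # hypothetical agent/model label
    success: bool   # did the whole scenario complete?
    minutes: float  # time taken to finish or give up


def rank(runs: List[Run]) -> List[Run]:
    """Successful runs first, then faster runs first (lower time is better)."""
    return sorted(runs, key=lambda r: (not r.success, r.minutes))


def beats_human_baseline(run: Run) -> bool:
    """A run clears the bar only if it succeeds within the human baseline time."""
    return run.success and run.minutes <= HUMAN_BASELINE_MINUTES


if __name__ == "__main__":
    # Illustrative values only, not real benchmark results.
    runs = [
        Run("agent_a", success=True, minutes=7.5),
        Run("agent_b", success=False, minutes=2.0),
        Run("agent_c", success=True, minutes=2.5),
    ]
    for r in rank(runs):
        print(r.agent, r.success, r.minutes, beats_human_baseline(r))
    # agent_c True 2.5 True
    # agent_a True 7.5 False
    # agent_b False 2.0 False
```

Sorting on the tuple `(not success, minutes)` means a fast but failed run never outranks a slow successful one, which reflects success being the primary criterion.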

Impact

A benchmark like this not only provides a set of rules and scenarios for testing, and "objectively" stating, whether an AI browser agent truly understands the environment we ask it to work in, but also demonstrates some "countermeasures" that make it harder for AI browser agents to interact with our websites.

Unfortunately, big tech AI companies have already collected almost all the data from our websites through scraping. Now that AI browser agents are becoming a reality, people are starting to look for measures that block or prevent AI from using and interacting with their websites.

Without such measures, a malicious AI browser agent could even interact with the dark web or illicit websites to make purchases or commit other kinds of illicit activity or abuse. Guardrails are therefore needed not only from those who build browser agents; websites also need to implement a new kind of "captcha" system that stops browser agents from interacting freely and without limits.

Captchas and other measures were introduced to prevent bot attacks and abusive behaviour, but in the era of LLMs these systems are starting to become obsolete. New systems need to be built into websites to keep the web a safe place for humans.

Play

To test an AI browser agent, you can follow this link. To add results for different models, please tag the Hoken Tech CTO on X: @AlfredodeCandi1

This is the test prompt we used, but you are free to change it to get better results:

go to https://www.hokentech.tech/htbb login into it find the recipe, buy the items and pay it with the credit card listed on that website